I’m wondering if anyone at Acumatica ( especially

I’ve noticed this behavior before, but this is the first time that I got out my stopwatch to time it and verify.

I’m on an Acumatica site, pulling data from a Generic Inquiry over Acumatica OData, using Power BI Desktop. The Generic Inquiry is a Generic Inquiry of AR Invoice Lines, pulling from PX.Objects.AR.ARTran and PX.Objects.AR.ARInvoice. The build is 21.209.0034.

I tried two different methods of accessing the data:

First Method

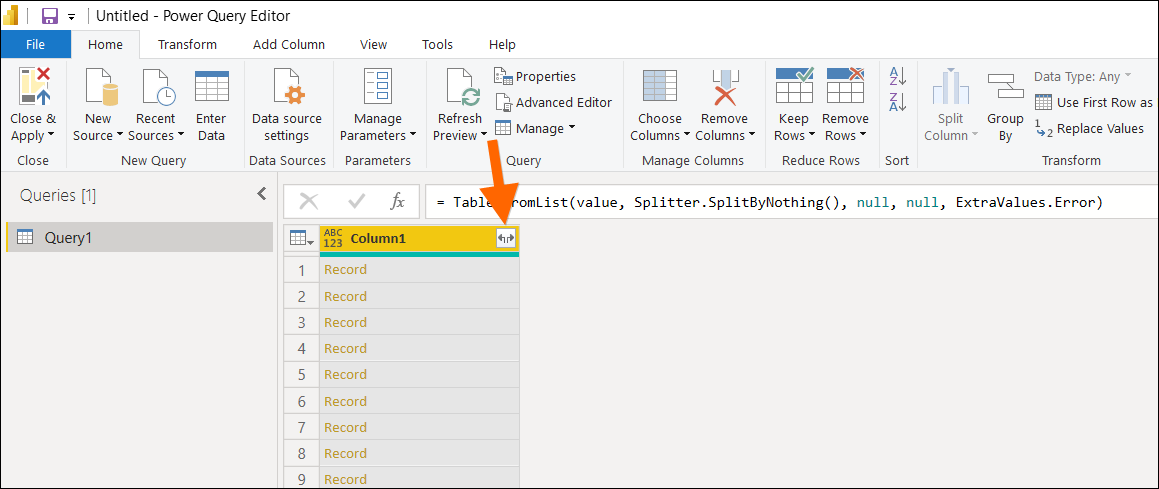

Connect to the Acumatica OData URL, then browse to the Generic Inquiry within the Power BI user interface, then remove all columns except DocType, RefNbr, and LineNbr using the Power BI user interface. The end result is three columns and this is the Power BI code that gets generated.

let

Source = OData.Feed("https://mycompany.acumatica.com/odata/production", null, [Implementation="2.0"]),

#"PBI-ARInvoiceLines_table" = Source{[Name="PBI-ARInvoiceLines",Signature="table"]}[Data],

#"Removed Columns" = Table.RemoveColumns(#"PBI-ARInvoiceLines_table",{"CustomerID", "DocDate", "Item", "NetSalesAmt", "TranType", "ReferenceNbr", "ClassID", "InventoryID"})

in

#"Removed Columns"

Second Method

Connect to the Acumatica OData URL, but specify the columns that I want using the $select parameter within the OData URL. The end result is the same as the First Method, but I can accomplish it using one step in Power BI and here is the Power BI code that gets generated:

let

Source = OData.Feed("https://mycompany.acumatica.com/odata/production/PBI-ARInvoiceLines?$select=DocType,RefNbr,LineNbr", null, [Implementation="2.0"])

in

Source

In the First Method, Acumatica sends more than 3 columns of data over to Power BI, then Power BI removes the columns once they arrive. So, I would think that the Second Method would run faster because Acumatica is only sending 3 columns of data over to Power BI.

But, the Second Method takes longer to refresh in Power BI than the First Method.

The First Method takes about 30 seconds to refresh.

The Second Method takes about 45 seconds to refresh.

FYI, there are about 36,000 records in this Generic Inquiry.

3 questions:

1. Is this kind of behavior expected?

2. Could it be that Acumatica has to do more work when you put $select in the OData URL and that’s why it takes longer?

3. Could the speed of $select be addressed when moving to OData V4 as discussed in this post?